What is Cloud Compression for Live Volumetric Video?

This project focuses on delivering live immersive experiences to end users.

Condense Reality will explore novel applications of deep learning to compress live-streamed volumetric video efficiently, so that live music events can be hosted in the metaverse.

The work will concentrate on:

- Novel methods that employ deep learning to improve compression efficiency.

- Enhanced architectures for delivering volumetric video content at variable bitrates.

- Integrating mechanisms into the decoder that adapt the bitrate dynamically to the available bandwidth and the device's capability, and updating the Unity and Unreal plugins so that they support whichever compression method is adopted.

Together, these developments are intended to give users the following benefits:

- Higher visual quality and a more immersive experience of volumetric video content

- Reduced end-to-end latency in the compression pipeline

- Improved unit economics of delivery

- Increased content accessibility

This project will make a major contribution to the state of the art in volumetric video delivery and have a significant impact on a wide range of immersive video applications.

Bristol has a unique ecosystem of researchers, technology companies and artists who can be at the forefront of defining and creating a future collaborative metaverse. The world-leading innovations in this project will contribute to this ambition and so bring significant economic benefits to the region.

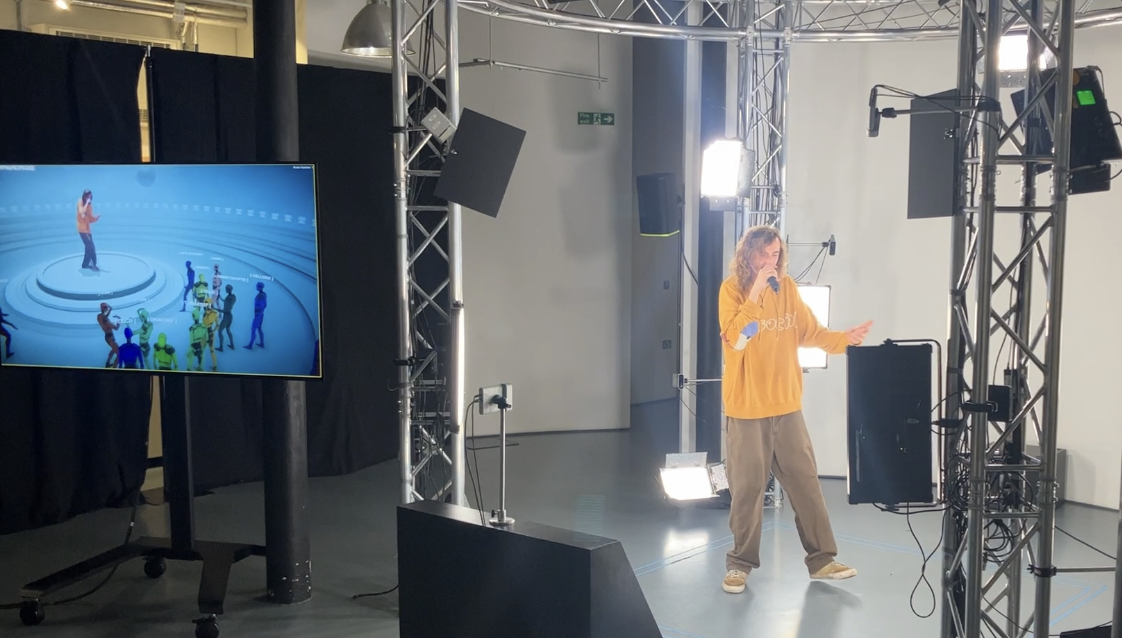

An insight into Condense Reality

Condense is a Bristol-based startup founded in 2019. It currently has 24 employees and is based at the Watershed, where it has its own Metaverse Studio. Condense has developed a system for capturing live volumetric video and streaming it into game engines. This allows the company to host ticketed events in virtual spaces, which could greatly expand the addressable market for live music.

More about the collaboration

The University of Bristol is recognised as a world leader in AI-based video coding, having contributed to MPEG standards with new training databases, coding frameworks and deep learning network architectures. In this collaborative project, researchers in the Visual Information Laboratory will contribute their expertise and extend their state-of-the-art coding approaches to volumetric video content.