Celestial Live is an R&D project to extend the capabilities of Celestial, a drone show company based in the South West. This successful start-up was founded in 2019 and has produced spectacular light shows for events such as London’s New Year fireworks display and the Eurovision Song Contest, and has had clients such as Amazon and Greenpeace.

Currently, drone shows are produced with a non-realtime model: animations are made in advance and exported to individual drones in a laborious process, and the show itself is entirely pre-programmed and fixed. Celestial Live makes an alternative model possible, in which drones can be controlled interactively, in real time. This opens up many exciting creative possibilities: a fleet of drones can be integrated into an audio-visual show, react to music, and respond to the movements of performers and even audiences.

Celestial Live is a collaboration between Celestial and Bath Spa University, who have an established track record in extended audio-visual practice, particularly around the work of Professor Joseph Hyde and the long-running Seeing Sound symposium and festival. Over the course of the project, Hyde has worked with animation and technical teams at Celestial (led by Head of Animation Anthony Head and supported by Project Manager Mark Brenchley) to develop a complex and sophisticated framework for the live control of a fleet of drones, using a variety of hardware and software. The project was framed by two workshops at Bath Spa University, one at the start and one at the end, with an extended development period in between.

Workshop 1

For the first workshop, held at BSU in April 2023, we developed what we called the ‘Virtual Instrument’ – a patch built in Max, Cycling ’74’s prototyping environment. The Virtual Instrument extracted a variety of musical parameters from an audio input – pitch, volume, and some analysis of musical timbre – and converted them into data that could control Celestial’s (existing) Drone Composer software. We also developed another patch capable of rudimentary movement tracking (using simple computer vision techniques) to give an equivalent degree of control from movement.
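To make the idea of turning audio analysis into control data more concrete, here is a minimal Python sketch. It is not the Max patch used in the project, and the source does not describe the format Drone Composer accepts, so the output names (`height`, `brightness`), the pitch range and the scaling are all hypothetical. It covers only volume (RMS level) and pitch (a crude autocorrelation estimate); timbre analysis is left out for brevity.

```python
import math


def rms_level(samples):
    """Root-mean-square level of a block of samples in the range [-1, 1]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch_hz(samples, sample_rate, fmin=80.0, fmax=1000.0):
    """Crude autocorrelation pitch estimate; returns 0.0 for near-silence."""
    if rms_level(samples) < 1e-4:
        return 0.0
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)
    best_lag, best_score = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Correlate the block with a copy of itself delayed by `lag` samples.
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag if best_lag else 0.0


def to_control(value, lo, hi):
    """Clamp `value` to [lo, hi] and scale it to the range 0.0 to 1.0."""
    return min(max((value - lo) / (hi - lo), 0.0), 1.0)


def analyse_block(samples, sample_rate):
    """Map one audio block to hypothetical normalised control values."""
    pitch = estimate_pitch_hz(samples, sample_rate)
    # Pitch is mapped on a logarithmic (musical) scale over 80 Hz to 1 kHz.
    height = (to_control(math.log2(pitch / 80.0), 0.0, math.log2(1000.0 / 80.0))
              if pitch > 0.0 else 0.0)
    # 0.707 is roughly the RMS level of a full-scale sine wave.
    brightness = to_control(rms_level(samples), 0.0, 0.707)
    return {"height": height, "brightness": brightness}


if __name__ == "__main__":
    sr = 8000
    tone = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(1024)]
    print(analyse_block(tone, sr))
```

Normalising every parameter to the range 0.0 to 1.0 keeps the analysis independent of whatever the receiving software expects; a final mapping stage can then rescale each value to a drone parameter.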

We held this workshop in one of BSU’s music rehearsal spaces, with a select group of staff, PhD candidates and postgraduate students from the Music and Dance departments. We tried our prototype patch with a variety of musical instruments and voice, and also with dance-based movement as an input. We used these inputs to control a variety of very simple drone setups in the Drone Composer software, which prompted an extensive and fruitful discussion on what was working, what was less effective, and what to develop further in future.

Main Development Period

During the Main Development Period, from April to December 2023, our primary aim was to take the functionality of the Virtual Instrument we developed for the workshop and develop it into a fully-fledged system, robust and flexible enough for a wide variety of scenarios. To this end, we adopted a modular approach, based around an open-ended set of plugins rather than a single patch/app. We adopted the popular digital audio workstation (DAW) Ableton Live as our platform and developed a series of plugins to integrate into it. We turned the audio and movement analysis patches we developed for the first workshop into plugins, and supplemented these with additional plugins to allow input from devices such as MIDI controllers, lighting desks, etc., with the idea that further plugins can be added to integrate other means of control in future.
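The modular approach can be illustrated with a small Python sketch of a plugin registry. This is an illustration of the general pattern only, not the project’s Ableton Live plugins: the `ControlHub` class, the `midi_fader_source` helper and the naming scheme are all hypothetical. Each input source is a function that returns named control values, and the hub merges whatever sources have been registered into a single frame.

```python
from typing import Callable, Dict

# A frame of named control values, each normalised to the range 0.0 to 1.0.
ControlFrame = Dict[str, float]
ControlSource = Callable[[], ControlFrame]


class ControlHub:
    """Hypothetical registry that merges values from independent input sources."""

    def __init__(self) -> None:
        self._sources: Dict[str, ControlSource] = {}

    def register(self, name: str, source: ControlSource) -> None:
        """Add a new input source without touching the existing ones."""
        if name in self._sources:
            raise ValueError(f"source {name!r} is already registered")
        self._sources[name] = source

    def poll(self) -> ControlFrame:
        """Collect one frame, prefixing each key with its source name."""
        frame: ControlFrame = {}
        for name, source in self._sources.items():
            for key, value in source().items():
                # Clamp so that a misbehaving source cannot exceed the range.
                frame[f"{name}/{key}"] = min(max(float(value), 0.0), 1.0)
        return frame


def midi_fader_source(cc_values: Dict[int, int]) -> ControlSource:
    """Wrap MIDI control-change values (0 to 127) as a normalised source."""
    return lambda: {f"cc{num}": val / 127.0 for num, val in cc_values.items()}


if __name__ == "__main__":
    hub = ControlHub()
    hub.register("midi", midi_fader_source({1: 64, 7: 127}))
    hub.register("audio", lambda: {"level": 0.42})
    print(hub.poll())
```

Because every source exposes the same simple interface, supporting a new controller means writing one more source function rather than changing the hub.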

During this phase we also developed a physical counterpart to the Virtual Instrument, as a proposal for what the ideal physical input device for controlling a drone show live might be. We considered building hardware from scratch, but here too we adopted a modular and open-ended approach, bringing together a set of high-end but commercially available devices and integrating them into a self-contained and portable unit.

Workshop 2

The second workshop took place in December 2023, and focused on the project from a creative technology angle. Again we recruited primarily postgraduate students and staff, but this time from the Creative Computing, Games Development and Sound Production courses. Our aim here was to explore the software and hardware ecosystem we had developed in depth, working through various scenarios around potential drone shows and related applications, and to invite ideas for further development. This workshop was a little larger than the first one and took place in one of BSU’s flagship TV studios. It was accompanied by a short presentation to share the research outcomes of the project with a broader audience from the University.

Final Stages and Further Development

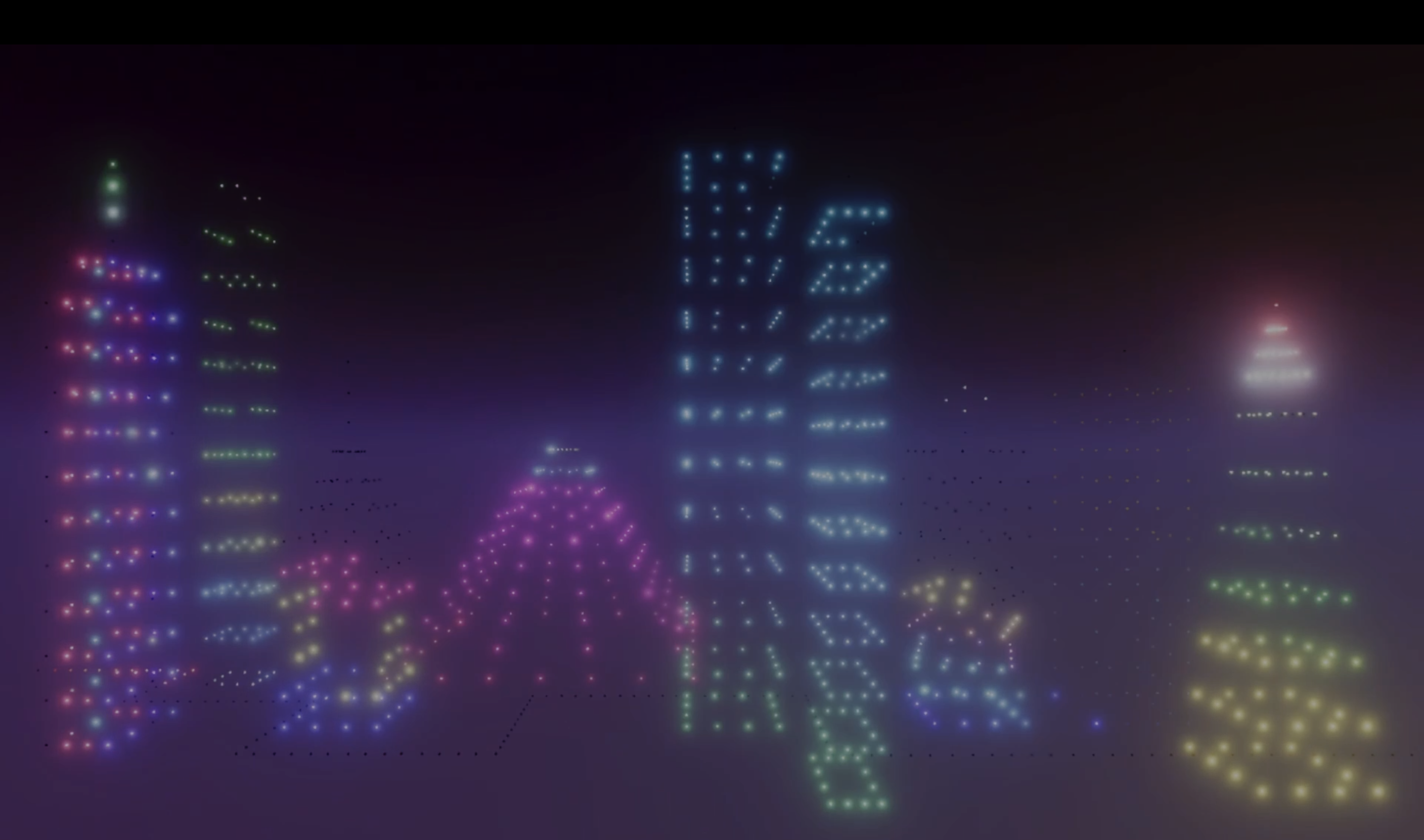

The project wrapped up in January with the demonstration of a 50-drone prototype show based around the Virtual and Physical Instruments, with original music from Joseph Hyde. This was presented alongside an interactive demo of these tools, and took place at Celestial’s base ‘The Hive’ near Bath.

The Celestial Live team are keen to continue development of these tools and techniques beyond the timeframe of the funded project and in particular to look at applications that can involve the full fleet of drones. We are currently looking for opportunities to support this, and welcome any enquiries! Contact Joseph Hyde at j.hyde@bathspa.ac.uk or Celestial at ah@celestial.show.