It seems that everyone wants games to start using generative AI – from players hungry for new experiences to investors hungry for new opportunities.

Everyone, that is, except for game devs… i.e. the people actually making games on the ground. Instead of new opportunities and experiences, they see a much bleaker picture: the loss of jobs, the decay of meaning, and the negation of creative control.

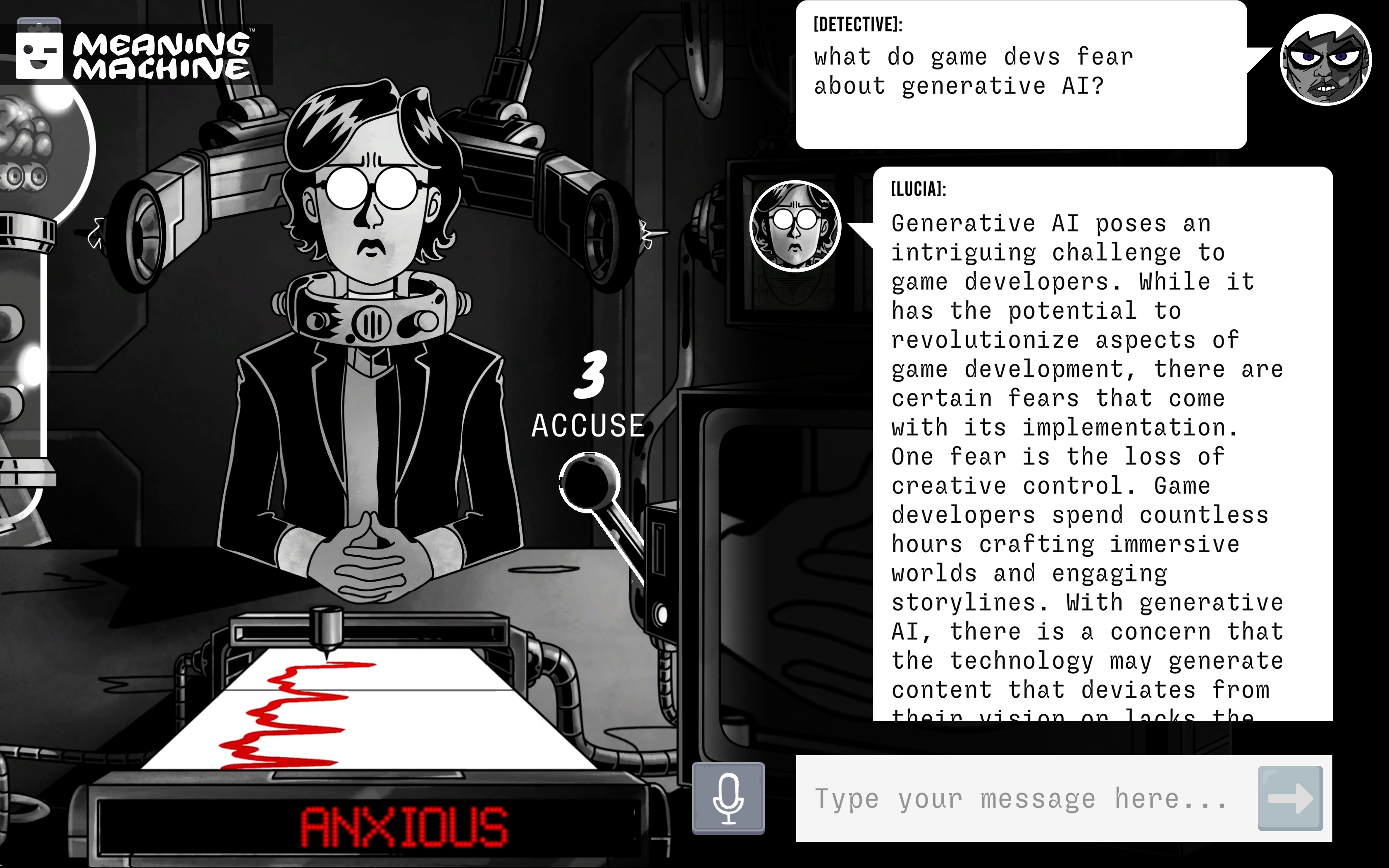

Crucially, we at Meaning Machine see these concerns not just as legitimate, but as central challenges that need to be addressed before generative AI can take root in video games. Moreover, we see these challenges as something that we can help with – as part of our work within the MyWorld programme with Digital Catapult and NVIDIA to develop AI tooling for in-game characters and dialogue.

For us, the change starts with three strategies that flip the script on the current conversation around generative AI in video games. These strategies position creative people at the heart of an AI-enabled future, not as casualties of it – and empower devs to spearhead a bold new period of experimentation and self-expression, in which human-led creative vision matters more than ever.

Meaning Machine Co-founders Thomas Keane and Ben Ackland

Strategy 1: Author-directed Models

The fear of creative control being usurped by generative AI is a legitimate concern among game developers. The general models that have made headlines (e.g. GPT) give creators very limited levers of power, and instead rely on their own learned logic to free-wheel content into game worlds. And so it’s perhaps no wonder that existing players in the generative AI tech space use the UI metaphor of a “magic wand”: input a couple of parameters, click the magic wand, get lots of content! But what these companies fail to understand is that this is the opposite of what developers want.

Developers want control over their game worlds. They have an authorial vision – something they want to say as humans, something that the game is a vehicle for communicating. And so, if generative AI is ever going to win over game devs, it needs to be in the form of tooling that enables them to enact that authorial vision.

Which is why we propose moving away from general AI models and towards “author-directed models” that devs can train with their own content and curate based on their bespoke gameplay scenarios. This shift gives creators tight control over the output – instead of the loose direction currently available on the market. We will be sharing more details of how this is achieved in due course.

Strategy 2: ‘Game Conscious’ ™️ Dialogue

In his keynote talk at Develop 2023, leading indie dev Jon Ingold critiqued AI for its inability to generate content in sync with the wider game dynamics. For him, “if the [generated content] doesn’t connect to, or resonate with, or inflect on the other lore in the game… it’s literally worthless.”

On one hand, this content is ‘worthless’ because it creates an inevitable disconnect with the lived experience of players. But from the devs’ perspective, it’s also an issue of authorial vision: if AI content cannot directly connect to what is happening in the game, then it cannot further the creative vision of the dev.

To counteract this ‘worthlessness’, we are developing tech that enables AI models to generate dialogue that is directly tied to real-time game worlds. We call this ‘Game Conscious’ ™️ Dialogue – and we will be sharing details of this unique technology after the conclusion of the MyWorld Challenge Call project. For us, this technology isn’t just ‘nice to have’, it’s a fundamental requirement, because the ‘game-blind’ models currently on the market are clearly failing to create meaningful experiences.

Strategy 3: Jobs for Creative People

While the loss of creative control is a key concern for devs, their greatest concern is arguably that generative AI will take a scythe through the industry – and leave creative people without jobs. This is largely because early advocates of generative AI emphasise its power as a “time-saving” and “cost-cutting” tool. And it doesn’t take a genius to read between the lines of this promise: fewer people needed to do more work.

But we at Meaning Machine envision a very different future – a future unlocked by the technology we are building. This is because, in our experience, generative AI is only as good as the creative people who wield it. And so the pipelines we are designing ensure the involvement of creative people at every step, and over a long period.

This means that using our tools won’t incentivise studio bosses to cut back on creative people – since the tools supercharge the value of those creative people. Profit will come about as a result, but it will be through the pursuit of bold new visions and player satisfaction, not cost-cutting.

Conclusion

When it comes to generative AI, the industry is on a knife-edge – as studio bosses study balance sheets, and tech bros lurk in the wings. But we’re optimistic.

As game devs who are actually using generative AI in our games, we believe in the creative potential of generative AI. We recognise the risk to creative work and creative people. But we believe that these risks can be overcome through technology that promotes the use of generative AI as a tool for human self-expression – with creators involved at every step of the way. This is the technology we’re building, and we’d love you to join us on the journey.

Follow us on Twitter (@meaningmachine_) for updates or reach out directly to Tom (tom@meaningmachine.games) or Ben (ben@meaningmachine.games).

Blog by Meaning Machine, Challenge Call project.